Generative AI: 26 Keywords You Need to Know in 2026

Generative AI is one of the most exciting and innovative fields of artificial intelligence, with applications ranging from art and music to medicine and engineering. But what exactly is generative AI, and what are some of the key terms and concepts you need to know to understand it better? In this article, we will explain 26 of the most common and important keywords related to generative AI and its recent developments.

Why Is This Necessary?

The primary purpose of this glossary is to help people understand how AI systems work. With the rapid development of new models, apps, software, and AI-enhanced devices, having a fundamental grasp of these keywords will make it easier to comprehend these advancements.

Moreover, data shows that generative AI is already significantly impacting the economy in various ways. Staying updated with the latest developments is crucial, but first, you need to know the basics!

Keywords

The keywords start at the foundations and then build up in difficulty, so enjoy!

Note: This list is not comprehensive.

1. Generative AI

Generative AI refers to a type of artificial intelligence that creates new, original content, such as text, images, or music, based on patterns and examples it has learned from existing data.

2. LLM

LLM commonly stands for “Large Language Model.” It refers to advanced AI models, like GPT-3, designed to understand and generate human-like text on a large scale, enabling a broad range of natural language processing tasks.

They are trained on large datasets of text which allow them to predict the next word in a sentence. By learning from a large and diverse corpus of text, these models can capture general linguistic patterns and common sense knowledge, but they may also inherit biases and errors from the data.
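To make "predict the next word" concrete, here is a toy sketch that counts which word follows which in a tiny made-up corpus and then predicts the most frequent follower. Real LLMs use neural networks over tokens rather than simple word counts; the corpus and the function names below are illustrative assumptions only.

```python
from collections import Counter, defaultdict

def train_bigram_model(text):
    """Count, for every word, which words follow it in the text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers

def predict_next_word(model, word):
    """Return the most frequent follower of `word`, or None if the word is unseen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Hypothetical miniature corpus, for illustration only.
corpus = "the sky is blue . the sky is clear . the sea is blue ."
model = train_bigram_model(corpus)

print(predict_next_word(model, "sky"))  # is
print(predict_next_word(model, "is"))   # blue ("blue" follows "is" twice, "clear" once)
```

The same weakness the paragraph above mentions shows up even here: the model can only repeat patterns, including any biases, that exist in its training text.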

Examples include:

GPT-3 and GPT-4 by OpenAI (Most Popular)

Llama 1 and 2 by Meta (Open source)

Gemini (formerly Bard) by Google

3. Chatbot

A chatbot is a type of computer program, often employing generative AI or large language models, that engages in conversation with users. It uses natural language processing to comprehend and respond to user input, serving purposes like providing information or assistance.

4. GPT

GPT, short for Generative Pre-trained Transformer, is a powerful language model. It creates human-like text based on patterns learned from diverse data, showcasing advanced natural language processing capabilities.

Many AI applications, such as chatbots, are built on top of GPT-style models, but not all of them are.

5. Prompt

A prompt is a specific input or request given to a generative AI, like GPT, to elicit a response or generate content in a conversational manner, as seen in chatbots.

It is the main way to interact with an LLM, but you can also input files, images and even audio, depending on which model you are using.

6. Prompt Engineering

Prompt engineering involves strategically crafting inputs or queries to guide generative AI models in producing desired outputs. It’s a technique to influence the responses of systems like chatbots or language models like GPT.

Some examples of prompt engineering techniques include:

Few-shot prompting

ReAct prompting

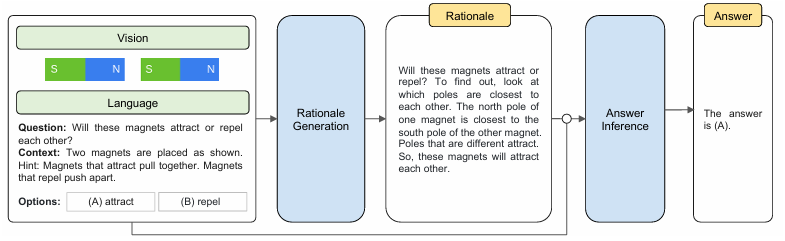

Chain-of-thought (CoT)
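As a rough illustration of the first technique in the list, few-shot prompting simply means placing a handful of worked examples in the prompt ahead of the new input. The helper below is a minimal sketch; the "Input:" and "Output:" labels and the function name are assumptions made for this example, not a required format.

```python
def build_few_shot_prompt(instruction, examples, new_input):
    """Assemble an instruction, worked examples, and the new input into one prompt."""
    lines = [instruction, ""]
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")
    # Leave the final output blank so the model completes it.
    lines.append(f"Input: {new_input}")
    lines.append("Output:")
    return "\n".join(lines)

# Hypothetical sentiment examples, for illustration only.
examples = [
    ("I loved this film.", "positive"),
    ("The food was cold.", "negative"),
]
prompt = build_few_shot_prompt(
    "Classify the sentiment of each input.", examples, "Great service!"
)
print(prompt)
```

The resulting string would then be sent to whichever model you are using in place of a bare question.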

7. Tokens

Tokens, in the context of language models, are the individual units of text a model reads and writes, such as whole words, parts of words, or single characters. They form the basis for comprehension and generation within generative AI, like GPT, when processing text.

You can use OpenAI’s tokenizer to see how many tokens you are using for a prompt.

Generate a recipe list for organic and meat-free dishes for a family of 5. Ensure there are no nuts present in any of recipes and include details about macronutrients.

So these 29 words are equivalent to around 36 tokens. The conversion will vary depending on which model's tokenizer you are using.
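To see why a prompt usually has more tokens than words, here is a toy tokenizer that greedily matches the longest piece it knows and falls back to single characters. This is only a sketch: real tokenizers learn their vocabulary from data, and the tiny vocabulary below is a made-up assumption, so its output will not match OpenAI's tokenizer.

```python
def tokenize(text, vocabulary):
    """Split text into the longest known pieces, falling back to single characters."""
    tokens = []
    position = 0
    while position < len(text):
        # Try the longest possible piece first, then shrink one character at a time.
        for end in range(len(text), position, -1):
            piece = text[position:end]
            if piece in vocabulary or end == position + 1:
                tokens.append(piece)
                position = end
                break
    return tokens

# Hypothetical vocabulary, for illustration only.
vocabulary = {"macro", "nutri", "ents", " meat", "-free", " recipe", "s"}

print(tokenize("macronutrients", vocabulary))      # ['macro', 'nutri', 'ents']
print(tokenize(" meat-free recipes", vocabulary))  # [' meat', '-free', ' recipe', 's']
```

Here one long word becomes three tokens, which is the kind of splitting that pushes token counts above word counts.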

8. Context Window / Context Length

A context window, or context length, in the realm of language models, refers to the maximum number of tokens (the prompt plus any text generated so far) that a model can consider at once when generating or understanding the next token in a sequence of text.

For example, OpenAI's GPT-4 Turbo, announced in November 2023, has a context window of 128,000 tokens, which is equivalent to around 300 pages of text.
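In practice, an application has to make a conversation fit inside the context window. One simple approach, sketched below, is to keep only the most recent messages that fit a token budget. The word-count estimate is a deliberately crude assumption standing in for a real tokenizer, and the function names are hypothetical.

```python
def estimate_tokens(text):
    """Very rough stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())

def fit_to_context_window(messages, max_tokens):
    """Keep the most recent messages whose combined estimated size fits the budget."""
    kept = []
    used = 0
    # Walk backwards from the newest message and stop at the first one that does not fit.
    for message in reversed(messages):
        cost = estimate_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))

history = [
    "Hi, I need help planning meals.",   # 6 words
    "Sure, any dietary requirements?",   # 4 words
    "No nuts and no meat please.",       # 6 words
]
print(fit_to_context_window(history, max_tokens=10))
```

With a budget of 10, the oldest message is dropped and the two most recent ones are kept. Other strategies, such as summarizing older messages, are also common.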

9. Fine-Tuning

A fine-tuned model is an AI model that has undergone additional training on specific data or tasks after its initial pre-training. This process refines the model’s performance for particular applications or domains.

Common use cases of fine-tuning include creating specialized chatbots that you might see in your mobile banking app or when online shopping.

NatWest recently announced a partnership with IBM to help develop more human-like chatbots using generative AI, which will likely incorporate fine-tuning on NatWest’s financial intelligence data.

10. Attention Mechanism

An attention mechanism in AI refers to a component that enables models to focus on specific parts of input data when making decisions or generating outputs. It helps capture relevant information, enhancing performance.
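One widely used form of this idea is scaled dot-product attention: a query is compared with a set of keys, the similarity scores become weights, and the output is a weighted average of the matching values. The sketch below handles a single query in plain Python; the vectors are made-up numbers chosen only to make the weighting visible.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    largest = max(scores)
    exps = [math.exp(score - largest) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The output is a weighted average of the value vectors.
    output = [
        sum(weight * value[i] for weight, value in zip(weights, values))
        for i in range(len(values[0]))
    ]
    return weights, output

# Hypothetical keys and values, for illustration only.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]

weights, output = attend([4.0, 0.0], keys, values)
print([round(w, 3) for w in weights])
print([round(x, 3) for x in output])
```

The query points in the same direction as the first key, so the second key, which shares nothing with it, receives almost no weight.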

11. Temperature

Temperature is a common sampling parameter, often adjusted alongside prompt engineering, that influences the randomness of generated outputs. Higher values increase randomness, producing more diverse responses, while lower values result in more deterministic and focused outputs.

For example, suppose we want to generate a sentence that starts with “The sky is”. If we use a low temperature value, such as 0.1, the model might produce something like “The sky is blue and clear”. This is a reasonable and common sentence, but not very creative. If we use a high temperature value, such as 1.0, the model might produce something like “The sky is a canvas of dreams”.
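Under the hood, temperature typically works by dividing the model's raw scores (logits) by the temperature before they are converted into probabilities. The sketch below shows the effect; the candidate continuations and their scores are invented for illustration and do not come from any real model.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities; lower temperature sharpens the distribution."""
    scaled = [value / temperature for value in logits]
    largest = max(scaled)
    exps = [math.exp(value - largest) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]

# Hypothetical scores for continuations of "The sky is".
candidates = ["blue and clear", "grey today", "a canvas of dreams"]
logits = [3.0, 2.0, 0.5]

for temperature in (0.1, 1.0):
    probabilities = softmax_with_temperature(logits, temperature)
    print(temperature, [round(p, 3) for p in probabilities])
```

At 0.1 almost all of the probability lands on the top-scoring continuation, while at 1.0 the less likely continuations keep a real chance of being sampled.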

12. Embeddings

Embeddings are numerical representations of words or entities in a way that captures their semantic relationships. They enable AI models, like language models, to understand and process language effectively.

Below is an example of how a sentence might be decomposed into a vector.
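As a minimal sketch of the idea, the snippet below represents three words as made-up three-dimensional vectors and compares them with cosine similarity. Real embeddings come from a trained model and usually have hundreds or thousands of dimensions; these numbers are assumptions chosen so that related words sit close together.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings, for illustration only.
embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "kitten": [0.85, 0.9, 0.15],
    "car": [0.1, 0.2, 0.95],
}

print(round(cosine_similarity(embeddings["cat"], embeddings["kitten"]), 3))
print(round(cosine_similarity(embeddings["cat"], embeddings["car"]), 3))
```

"cat" and "kitten" score close to 1, while "cat" and "car" score much lower, which is how semantic relationships show up as geometry.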

13. Retrieval Augmented Generation (RAG)

Retrieval Augmented Generation lets you provide additional context in your prompt by automatically sourcing relevant information from a vector database and injecting it into the prompt at inference time.

Your initial prompt might look like this:

“How do you feel about Donald Trump being president?”

With RAG, the prompt that actually reaches the LLM might look like this:

“How do you feel about Donald Trump being president?” — Use the following information to answer the question: As of December 2024, Donald Trump has been elected as the next President of the United States, defeating Kamala Harris in the 2024 election. He is set to take office in January 2025, marking his second term as President. Trump has announced plans to impose significant tariffs on goods imported from Canada, Mexico, and China.

The latter should elicit a more informed response from the AI.

RAG is the standard approach to providing additional context. It involves integrating a vector database and a retrieval mechanism to find text relevant to your prompt/query.

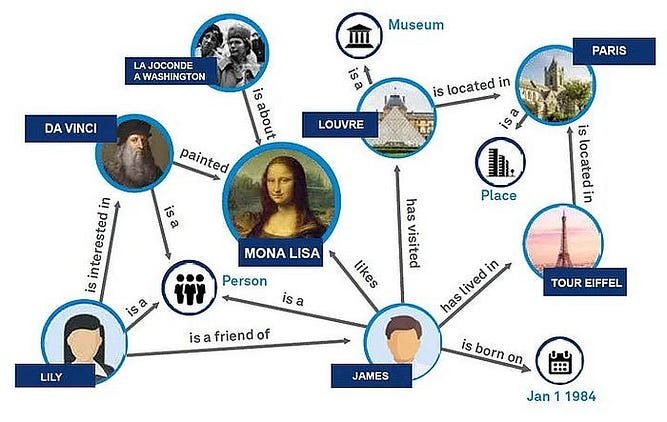
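The retrieve-then-inject flow can be sketched in a few lines. To stay self-contained, this example scores documents by shared words instead of querying a real vector database, so treat the scoring function, the documents, and the function names as assumptions rather than how a production RAG system works.

```python
def score(query, document):
    """Count shared words; a crude stand-in for vector similarity search."""
    query_words = set(query.lower().split())
    document_words = set(document.lower().split())
    return len(query_words & document_words)

def retrieve(query, documents, top_k=1):
    """Return the top_k documents that best match the query."""
    ranked = sorted(documents, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:top_k]

def build_rag_prompt(query, documents, top_k=1):
    """Inject the retrieved text into the prompt that reaches the LLM."""
    context = "\n".join(retrieve(query, documents, top_k))
    return f"{query}\n\nUse the following information to answer the question:\n{context}"

# Hypothetical knowledge base, for illustration only.
documents = [
    "The refund policy allows returns within 30 days of purchase.",
    "Our support team is available on weekdays from 9am to 5pm.",
    "Shipping to Europe usually takes five working days.",
]

prompt = build_rag_prompt("What is the refund policy for returns?", documents)
print(prompt)
```

In a real system, the `score` step would be replaced by an embedding model plus a similarity search over the vector database.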

14. Graph Retrieval Augmented Generation

This advanced technique enhances the traditional Retrieval Augmented Generation (RAG) by leveraging graph data structures. Graph RAG uses nodes and edges to represent and retrieve information, allowing for more accurate and contextually relevant responses.

By incorporating relationships and connections between data points, Graph RAG improves the quality and coherence of generated content.

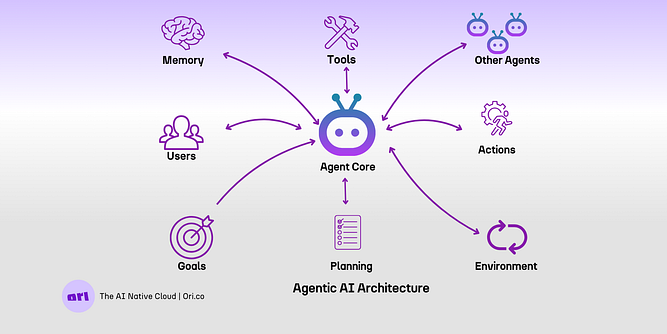
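To give a feel for graph-based retrieval, the sketch below stores facts as edges and collects everything within a couple of hops of a starting entity. The graph, the relation names, and the hop limit are all made up for illustration, and this version only follows outgoing edges.

```python
from collections import deque

# Hypothetical knowledge graph stored as (subject, relation, object) edges.
EDGES = [
    ("Alice", "works_at", "Acme"),
    ("Acme", "based_in", "London"),
    ("Bob", "works_at", "Globex"),
    ("Globex", "based_in", "Paris"),
]

def retrieve_facts(start, edges, max_hops=2):
    """Breadth-first walk from `start`, collecting outgoing edges within `max_hops`."""
    facts = []
    visited = {start}
    queue = deque([(start, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue  # Do not expand nodes at the hop limit.
        for subject, relation, obj in edges:
            if subject == node:
                facts.append(f"{subject} {relation} {obj}")
                if obj not in visited:
                    visited.add(obj)
                    queue.append((obj, depth + 1))
    return facts

print(retrieve_facts("Alice", EDGES))
# ['Alice works_at Acme', 'Acme based_in London']
```

A question like "Which city does Alice work in?" needs both facts, and following the connection from Alice to Acme to London is what lets the retriever surface them together.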

15. AI Agent

An AI agent is an intelligent entity integrated into systems like RAG to perform specific tasks autonomously. These tasks can include document retrieval, summarization, response generation, and more. AI agents enhance the efficiency and adaptability of AI systems by handling complex operations and providing specialized support based on the context and requirements of the task at hand.

16. Autonomous Agents

Autonomous agents are agents capable of planning, executing and evaluating tasks with minimal human input or prompting, acting as operators instead of assistants. Examples include OpenAI’s Codex and Claude Code.
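The plan, execute, evaluate cycle can be sketched as a simple loop. In a real agent an LLM would produce the plan and decide how to react to failures; here both are replaced with fixed logic, and the tool, the price data, and the function names are hypothetical.

```python
PRICES = {"apple": 0.5, "bread": 2.0}  # hypothetical data source

def lookup_price(item):
    """Toy tool: return the price of an item, or None if it is unknown."""
    return PRICES.get(item)

TOOLS = {"lookup_price": lookup_price}

def run_agent(items, max_steps=10):
    """Plan one tool call per item, execute each step, then evaluate the outcome."""
    # Plan: a real agent would ask an LLM to produce these steps.
    plan = [("lookup_price", item) for item in items]

    # Execute: call the named tool for each step, up to the step limit.
    results, failures = {}, []
    for tool_name, argument in plan[:max_steps]:
        price = TOOLS[tool_name](argument)
        if price is None:
            failures.append(argument)
        else:
            results[argument] = price

    # Evaluate: the task only succeeds if every step produced a result.
    return {
        "total": sum(results.values()),
        "success": not failures,
        "failures": failures,
    }

print(run_agent(["apple", "bread"]))
print(run_agent(["apple", "caviar"]))
```

The second call reports a failure for the unknown item, which is the point at which a more capable agent might re-plan or try a different tool.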

17. Memory-Augmented Models

Models with persistent long-term memory that store and retrieve information across sessions, enabling continuity and personalization. Unlike standard models that "forget" everything once a chat ends, these systems build a user profile over time to provide deeper context.

Examples include:

Asyra AI: Our proprietary system designed to evolve alongside the user by archiving past interactions and preferences.

ChatGPT (Memory Feature): OpenAI’s implementation that allows the model to remember specific details—like your preferred coding language or family members—across separate conversations.

MemGPT: A research-led framework that manages "infinite" context by treating LLM context windows like RAM and external databases like a hard drive.

Claude (Project Knowledge): Anthropic’s way of allowing users to upload core "permanent" documents that the AI treats as a primary memory bank for all future tasks.
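The core idea, storing facts outside the model and feeding them back in later, can be sketched with a small file-backed store. This is not how any of the products above are implemented; the class, its methods, and the JSON file are assumptions made purely for illustration.

```python
import json
import tempfile
from pathlib import Path

class MemoryStore:
    """Tiny key-value memory that survives across sessions by saving to a JSON file."""

    def __init__(self, path):
        self.path = Path(path)
        # Load earlier memories if the file already exists.
        self.facts = json.loads(self.path.read_text()) if self.path.exists() else {}

    def remember(self, key, value):
        self.facts[key] = value
        self.path.write_text(json.dumps(self.facts))

    def recall(self, key, default=None):
        return self.facts.get(key, default)

    def as_prompt_context(self):
        """Format stored facts so they can be prepended to a prompt."""
        return "\n".join(f"{key}: {value}" for key, value in sorted(self.facts.items()))

# Simulate two separate conversations sharing one memory file.
memory_file = Path(tempfile.mkdtemp()) / "memory.json"

session_one = MemoryStore(memory_file)
session_one.remember("preferred_language", "Python")

session_two = MemoryStore(memory_file)  # a later, separate session
print(session_two.recall("preferred_language"))
print(session_two.as_prompt_context())
```

The second session never saw the original conversation, yet it can recall the preference because the fact was written to storage outside the model.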

18. Multimodal Reasoning

Unified reasoning across text, images, audio, code, and actions — not just multimodal input but integrated cognition.

19. Workflow Orchestration

AI systems that coordinate tools, APIs, and agents — effectively acting as the “operating system” for business processes.

20. Edge AI

Running powerful models locally on phones, laptops, and wearables for privacy, speed, and offline capability. Meta AI glasses are a great example of this, bringing real‑time multimodal reasoning directly onto a wearable device without relying on constant cloud access.

21. Synthetic Data

Synthetic data is not a new term to people researching and developing in the field, but for beginners in AI and for consumers it is still relatively new.

Synthetic data refers to data generated by AI models and/or LLMs. Examples include:

Using LLMs to generate training examples for fine-tuning (e.g. customer support dialogues, classification labels, edge-case scenarios)

Creating synthetic images for computer-vision tasks such as autonomous driving or medical imaging

Generating structured datasets that mimic real-world distributions and properties

Waymo, Tesla, and other autonomous-driving providers combine synthetic data with real-world examples to refine their algorithms. You can expect synthetic data to become more prevalent as AI models improve at generating realistic data.
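As a very small example of generating data that mimics a real-world distribution, the sketch below fits a normal distribution to a handful of "real" values and samples new ones from it. This is a simple statistical stand-in for a generative model, and the sample values are invented for illustration.

```python
import random
import statistics

def generate_synthetic_ages(real_ages, count, seed=0):
    """Sample new ages from a normal distribution fitted to the real ones."""
    rng = random.Random(seed)  # fixed seed so the output is reproducible
    mean = statistics.mean(real_ages)
    spread = statistics.stdev(real_ages)
    # Round to whole years and clamp at zero so every value is a plausible age.
    return [max(0, round(rng.gauss(mean, spread))) for _ in range(count)]

real_ages = [23, 35, 41, 29, 52, 38, 47, 31]  # hypothetical "real" sample
synthetic_ages = generate_synthetic_ages(real_ages, count=1000)

print(statistics.mean(real_ages), round(statistics.mean(synthetic_ages), 1))
```

The synthetic set has a similar average to the original without copying any individual record, which is the property that makes synthetic data useful for training and testing.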

22. Context-Aware Interfaces

Interfaces that adapt to a user's interests, intent, environment and history, moving beyond static UI into dynamic, user-shaped experiences. For example, a context‑aware interface might:

Surface tools you frequently use at specific times of day

Reconfigure layouts based on your workflow patterns

Anticipate next steps in a task and proactively offer shortcuts

Shift tone, complexity, or modality (text, voice, visual) based on your behaviour

Integrate environmental signals — location, device, motion, or ambient context — to adjust what it shows or how it responds

23. Thinking and Reasoning Models

A reasoning model is a type of AI designed to “think” before it speaks. Reasoning models still generate text one token at a time, but unlike standard LLMs that answer immediately, they use techniques like Chain-of-Thought (CoT) to break complex problems into logical steps internally before delivering a final answer. This can reduce hallucinations and tends to improve performance in math, coding, and deep strategy.

Examples include:

OpenAI o1 and GPT-5 Series Models: o1 was the first major model designed to spend more time “thinking” to solve difficult science and math problems, and the GPT-5 series builds on that approach.

DeepSeek-R1: An open-weights model that uses reinforcement learning to achieve high-level reasoning capabilities. It took the world by storm when it was first released to the public in early 2025.

Gemini 3 (Internal Thinking): Google’s multimodal approach to internal deliberation and logical verification.

Interestingly, the internal thinking process for Google’s Gemini 3 was reportedly leaked, and it is striking how many different operations, verifications and enhancements are apparently added to the response process without sacrificing latency.

24. World Model

While LLMs predict the next word, World Models are AI systems designed to understand the underlying physical or logical “rules” of an environment.

Popularized by research in autonomous driving (like Waymo) and robotics, a world model allows an AI to simulate potential outcomes before taking action. In 2026, these models are likely to start having more of an impact on gaming experiences and beyond into generative business simulations, where an AI can “imagine” the impact of a market shift before suggesting a strategy.

25. In-Context Learning

In-context learning (ICL) refers to guiding a model with material included in your prompt, such as worked examples, instructions, and guidance on how to use tools, rather than by retraining it. Companies are moving away from fine-tuning and towards retrieval-augmented ICL because it’s cheaper, safer and easier to monitor. Strategies include:

Chain-of-thought prompting

Self-consistency sampling

Tool-use demonstrations

Task decomposition via examples
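Of the strategies above, self-consistency sampling is easy to sketch: ask the same question several times and keep the answer that appears most often. The `fake_model` function below is a hypothetical stand-in for a real LLM call sampled at a non-zero temperature, and its canned answers are invented for illustration.

```python
from collections import Counter

def self_consistent_answer(sample_answer, question, samples=5):
    """Ask the same question several times and return the most common answer."""
    answers = [sample_answer(question, attempt) for attempt in range(samples)]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes

def fake_model(question, attempt):
    """Hypothetical stand-in for an LLM whose answers vary between samples."""
    canned = ["42", "42", "41", "42", "24"]
    return canned[attempt % len(canned)]

print(self_consistent_answer(fake_model, "What is 6 x 7?"))  # ('42', 3)
```

Two of the five samples are wrong, but the majority vote still lands on the right answer, which is the intuition behind the technique.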

26. Generative Engine Optimization

As users move away from traditional search engines toward AI-powered “Generative Engines” (like ChatGPT, Perplexity, or Gemini), the marketing world is shifting from SEO to GEO. This is the practice of optimizing content so that it is more likely to be cited, summarized, and recommended by an AI model.

In 2026, brands are focused on “AI Share of Voice” — ensuring their data is structured in a way that AI agents can easily retrieve and present it to users during a conversation.